Last week, I was the proud owner of a most-excellent Bluetooth headset, the Jawbone ICON. Compared to my years-old Motorola H700, the Jawbone was simply outstanding. I had owned this beautiful piece of technology for less than three months, and I was still enjoying its newness, along with its excellent audio quality.

Unfortunately, my puppy Nimbus felt that he, too, should have the privilege of playing with a Jawbone. As it turns out, Bluetooth headsets are not very durable as chew toys:

In a desperate plea for empathy, I tweeted my Horrible Headset Happenstance to the world:

The great folks at Jawbone Customer Support heard (ok, saw) my plea, along with the photo, and reached out to me. They told me they laughed when they saw my mutilated headset (and assured me they wept a bit, too). They assumed my dog must be really cute to be able to get away with something this mischievous and still live to bark about it. I assured them he was:

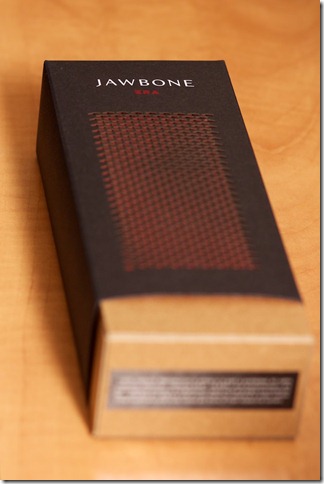

Graciously, Customer Support offered to assist me in my replacement quest. I decided to eschew (no, not chew) another ICON, and upgrade to the brand-newest Jawbone: The ERA. And much to my surprise, it arrived in the mail today. I can’t imagine these photos do it justice, but I wanted to share my unboxing experience. First, the box itself:

After I had stared at it for an undetermined amount of time, it was time to open it up:

And peeling back the front cover revealed a cornucopia of ear-fitting goodness.

I gently removed the ERA from its perch and gave it a full charge. It was time… Time for its maiden voyage. I called my wife, listening intently to the tonal quality of her phone ringing across the airwaves. She picked up, and I asked her to say something that I could quote to the world. “Anything,” I said. “Just lay it on me.” And she responded, so eloquently and with zero distortion:

“That’s what she said.”